I have always struggled to understand what code coverage reports are trying to tell me. I couldn't wrap my head around the one number they come up with: the percentage of code covered by tests. Is the current percentage OK? Should it be higher? Is 80% enough, or should I do something to reach the magical 100%? I had to find answers to those questions while working on a major refactoring of a system. The question was whether the coverage was good enough to give the refactoring the green light.

To answer the question, I decided to first look at coverage at the lowest level: the individual lines that are and aren't covered, with risk driving the analysis. Assume that all the types of automated regression tests in your project together cover 80% of your production code. This coverage comes from unit tests, API-level tests, WebDriver tests and other system-level tests. Besides telling you that 80% of the code is covered by a test, the number also tells you that 20% of your code has no test at all. Now switch from percentages to numbers of lines. In my case, the system we were about to refactor consisted of over a million lines of code. One fifth of a million is a lot of code missing coverage… a lot… but not every line is equal in value, and thus not every line is equal in risk. So what was the value and risk associated with each line missing coverage?

One way of looking at risk is as the probability of something unwanted occurring multiplied by the impact it causes. A missing test for a line of code is definitely a risk: nothing will tell you when the line is modified incorrectly and that the modification is going to cause a bug in production. You may ask how likely it is that a bug occurs in a particular line of code and, if a bug slips through, what it means to your end users. Would they even notice?
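The risk definition above can be written down directly. This is a minimal sketch, not the tooling I used: the `UncoveredLine` type, its field names and the units of `impact` are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UncoveredLine:
    """A line with no test coverage (hypothetical record shape)."""
    path: str
    line_no: int
    bug_probability: float  # assumed to be in the range 0..1
    impact: float           # assumed unit, e.g. share of users affected


def risk(line: UncoveredLine) -> float:
    """Risk = probability of an unwanted event times its impact."""
    return line.bug_probability * line.impact


def rank_by_risk(lines: list[UncoveredLine]) -> list[UncoveredLine]:
    """Return uncovered lines with the riskiest first."""
    return sorted(lines, key=risk, reverse=True)
```

With a ranking like this, the question shifts from "what is the percentage?" to "which uncovered lines should get a test first?".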

The good thing is that if you follow a naming convention for commits and link your commits to types of changes, you can obtain historical data that tells you how often bugs are fixed in a particular fragment of your application. That gives you the first part of the risk equation. The problem is how to translate business value to code. How do you decide which parts of the code are most valuable and thus potentially cause the most impact when something goes wrong?
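As a rough sketch of how commit history could feed the probability side, the function below computes, per file, the fraction of commits that were bug fixes. The `fix:` message prefix and the `(message, changed_paths)` input shape are assumptions; substitute whatever convention your team actually follows.

```python
from collections import Counter


def bug_fix_ratio(commits, fix_prefix="fix:"):
    """Map each file path to the share of its commits that were bug fixes.

    `commits` is an iterable of (message, changed_paths) pairs, a
    hypothetical shape you would fill from your version control history.
    """
    total = Counter()
    fixes = Counter()
    for message, paths in commits:
        is_fix = message.lower().startswith(fix_prefix)
        for path in set(paths):  # count each file once per commit
            total[path] += 1
            if is_fix:
                fixes[path] += 1
    return {path: fixes[path] / total[path] for path in total}
```

A file that is touched mostly by bug-fix commits is a reasonable candidate for a higher bug probability, although the ratio is only a proxy for it.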

One way of doing so is to understand which features are most popular among your users and which parts of the code are responsible for making those features accessible. As an example, take a feature that is exercised by making an HTTP call to your REST API. The popularity of this feature can be understood as the number of requests made to a particular REST API resource. The code involved in handling a request to that resource includes everything that gets called in your application when the request arrives.

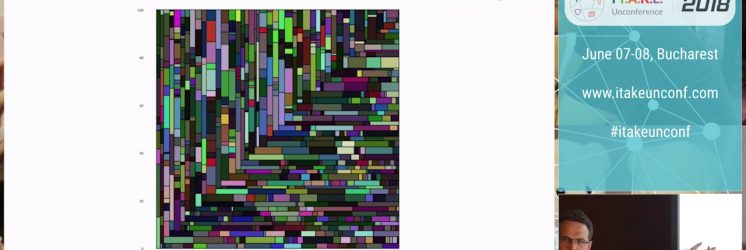
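Counting requests per REST resource could look roughly like this. The sketch assumes access log lines that contain a quoted request such as `"GET /orders/42 HTTP/1.1"` (as in the common log format) and that numeric path segments are identifiers; both are assumptions about your logs and URL scheme.

```python
import re
from collections import Counter

# Matches the quoted request part of an access log line.
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+"')


def resource_popularity(log_lines):
    """Count requests per (method, resource), ignoring query strings."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match is None:
            continue  # skip lines that do not look like requests
        path = match.group("path").split("?", 1)[0]
        # Collapse numeric segments so /orders/42 and /orders/7 are one resource.
        path = re.sub(r"/\d+(?=/|$)", "/{id}", path)
        counts[(match.group("method"), path)] += 1
    return counts
```

Mapping each resource to the code that handles it is a separate step that depends on your framework, so it is left out here.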

I combined multiple sources of data, such as historical bug data, production code metadata, access logs and code coverage reports, and came up with the report I was looking for: one that could tell me which tests I am missing, for which lines of code, based on their risk. This is the report I want to show you.
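To give a feel for the combination step, here is a simplified sketch that joins three inputs per file: uncovered lines from a coverage report, a bug-fix ratio from commit history, and request counts attributed to the file. The input shapes, the per-file granularity and the scoring are all assumptions for illustration, not the actual report.

```python
def rank_uncovered(uncovered, bug_ratio, requests_per_file):
    """Rank files with uncovered lines by probability times impact.

    uncovered:         {path: [line numbers without coverage]}
    bug_ratio:         {path: share of commits that were bug fixes}
    requests_per_file: {path: production requests that reached the file}
    """
    total_requests = sum(requests_per_file.values()) or 1
    report = []
    for path, lines in uncovered.items():
        probability = bug_ratio.get(path, 0.0)
        impact = requests_per_file.get(path, 0) / total_requests
        report.append((path, len(lines), probability * impact))
    # Highest risk first, so the top rows say where a test is missing most.
    report.sort(key=lambda row: row[2], reverse=True)
    return report
```

Each row is `(path, number_of_uncovered_lines, risk)`; files with no recorded bug fixes or no traffic fall to the bottom of the list.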

This presentation shares lessons learned and some ideas on what ideal coverage should look like when risk is taken into consideration. I will also discuss test impact analysis, one of the great benefits of understanding your test coverage, which lets you optimize test runs and reduce the likelihood of hitting a flaky test.

Video producer: http://itakeunconf.com/